My name is Alex Ionkov. Twitter GitHub LinkedIn

About

Vision Smartphone Passthrough Glasses

3D-printed, wearable, Google Cardboard-style glasses for use with a smartphone.

Currently compatible with WebXR (WebVR), A-Frame, and Google Cardboard. Fits the iPhone 11, iPhone X, or any phone of similar dimensions (~5.95" by ~2.95" by ~0.35"). I am currently using Google MediaPipe and TensorFlow for hand tracking and Three.js for rendering. The goal is to create what is essentially a cheap HoloLens using just a smartphone, a 3D-printed part, and computer vision. The website is at ionkov.com/vision and the GitHub repo is here.

I post regular updates on Twitter at @i0nif.

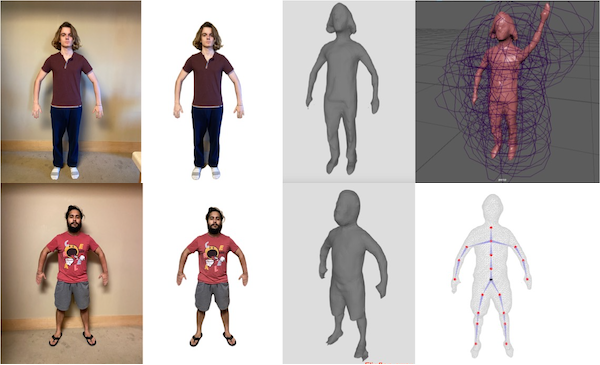

Rigme.io

My friend Jas and I created rigme.io (now defunct), which let you take a picture of yourself with your webcam and then emailed you back a rigged 3D model of yourself. We used the PIFuHD model to reconstruct the 3D mesh and Blender to do the rigging. The GitHub repo is here.

Kodi (XBMC)

I was accepted to Google Summer of Code with Kodi (XBMC). My mentor, Razzee, and I spent the summer writing a new web interface for Kodi in Elm called elm-chorus. Elm-chorus supports live playback and organization of media over WebSockets using Kodi's JSON-RPC API. The Medium reflection I wrote is here and the GitHub repo is here.
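For readers unfamiliar with JSON-RPC, here is a rough sketch of what the messages exchanged with Kodi look like. This is not code from elm-chorus (which is written in Elm). It is a minimal Python illustration, and the helper names `make_request` and `parse_response` are hypothetical. `JSONRPC.Ping` and `Player.GetActivePlayers` are methods from Kodi's JSON-RPC API. The sketch only builds and parses the JSON text and leaves out the WebSocket connection itself.

```python
import json
from itertools import count

# Each request carries a unique id so that a response arriving later
# on the same socket can be matched back to the request that caused it.
_request_ids = count(1)


def make_request(method, params=None):
    """Serialize a JSON-RPC 2.0 request as the text that would be sent over the socket."""
    message = {"jsonrpc": "2.0", "method": method, "id": next(_request_ids)}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def parse_response(raw):
    """Return (id, result) from a JSON-RPC 2.0 response, or raise if it carries an error."""
    message = json.loads(raw)
    if "error" in message:
        raise RuntimeError(message["error"].get("message", "unknown error"))
    return message["id"], message["result"]


# Example requests a web interface might send.
ping = make_request("JSONRPC.Ping")
active_players = make_request("Player.GetActivePlayers")
```

A client would send strings like `ping` as WebSocket text frames and pass each incoming frame to `parse_response`, using the returned id to decide which part of the interface to update.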

more projects:

posenetToThreejs: I connected TensorFlow.js PoseNet to a Three.js humanoid model using Socket.IO. The pose keypoints are mapped to the humanoid rig so that you can control it with your body. 2020.

GraphicsTown: Final project for CS559 Computer Graphics. I used Three.js to create a bustling island town with a trolley that travels along a spline.

Transpos: An iOS app written in Swift that recognizes an image and displays the corresponding 3D model over it. My friend Jas and I made it using Firebase to map images to 3D models and ARKit for the augmented reality aspect. 2019.

PyBioNetFit: Collaborated in a three-person team that developed an application, written in C++ and Python, for fitting models to experimental data. Wrote parts of the custom syntax parser for BNGL (the BioNetGen language) in Python. Created a graphical user interface for PyBioNetFit in PyQt5.

IgE Receptor Signaling: My partner and I studied the allergy signaling cascade by characterizing the IgE receptor in mast cells. I wrote a computational model in BioNetGen simulating the interacting subunits, which was used to predict certain rate constants, and fit it to experimental data with BioNetFit. Qualified for ISEF 2016 in Phoenix and ISEF 2017 in Los Angeles.

Refreshable Braille Display: A single-character braille display built with memory wire, which pulls or releases pins based on input from an Arduino. 2015.

Headtracking: Controlling your computer with your head. Using a Wiimote and safety glasses fitted with infrared LEDs, you could control your mouse pointer by moving and tilting your head. 2013.

3D Modeling/Animation Work

publications:

- Eshan D. Mitra, Ryan Suderman, Joshua Colvin, Alexander Ionkov, Andrew Hu, Herbert M. Sauro, Richard G. Posner, William S. Hlavacek, PyBioNetFit and the Biological Property Specification Language, iScience, Volume 19, 2019, Pages 1012-1036, ISSN 2589-0042, https://doi.org/10.1016/j.isci.2019.08.045.

- Hlavacek, W. S., Csicsery-Ronay, J. A., Baker, L. R., Álamo, M. D. C. R., Ionkov, A., Mitra, E. D., ... & Thomas, B. R. (2019). A step-by-step guide to using BioNetFit. In Modeling Biomolecular Site Dynamics (pp. 391-419). Humana Press, New York, NY.

- Mitra, E., Suderman, R., Ionkov, A., Hlavacek, W., & National Institutes of Health. (2018). PyBioNetFit. Los Alamos National Lab. (LANL), Los Alamos, NM (United States).